Crypto Trading Framework

arb-trops-phoenix is a fully featured crypto trading framework written in Python.

2021 - 2023

Arb-Trops-Phoenix

Arb-Trops-Phoenix is a comprehensive Python-based cryptocurrency trading framework. Built for professional and retail traders, quants, and hedge funds, it supports a wide range of strategies, including market making, intermarket spreading, arbitrage, statistical arbitrage, and other algorithmic strategies. It also supports research and analysis of market data.

Features and Highlights

- Supports multiple exchanges and instruments.

- Supports handling and executing multiple orders and implementing multiple trading strategies.

- Computes alpha in real time and responds promptly to real-time market data, quotes, trades, orders, positions, heartbeats, and custom events.

- Simplifies strategy development and deployment: no order handling or execution code needs to be written.

- Provides a daily updated referential for all available instruments across exchanges (spot, futures, perpetuals), including instrument and contract details such as tick size, contract size, minimum order size, minimum increment order size, expiration date, instrument type, feed code, and exchange code.

- Records market data (L2 book, trades, and funding rates) to Parquet files for research and analysis.

- Operates on an event-driven architecture.

- Implements a unified communication protocol for all components, facilitating easy integration of new events and components.

- Uses a multi-process, multi-threaded implementation aimed at performance, scalability, and resilience.

- Offers a highly configurable and extensible architecture, with a strong emphasis on modularity and reusability.
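The referential fields listed above (tick size, minimum order size, minimum increment order size) are the kind of data that makes pre-trade order checks possible. The following is a minimal sketch of such a check, not the framework's actual code: the `Instrument` class, its field names, and `validate_order` are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Instrument:
    """Hypothetical subset of the referential fields for one instrument."""
    feed_code: str
    tick_size: Decimal
    min_order_size: Decimal
    min_size_increment: Decimal


def is_multiple(value: Decimal, step: Decimal) -> bool:
    # Decimal arithmetic avoids the rounding noise of binary floats.
    return value % step == 0


def validate_order(inst: Instrument, price: Decimal, size: Decimal) -> list:
    """Return a list of problems; an empty list means the order passes."""
    problems = []
    if not is_multiple(price, inst.tick_size):
        problems.append("price is not a multiple of tick size")
    if size < inst.min_order_size:
        problems.append("size is below minimum order size")
    if not is_multiple(size, inst.min_size_increment):
        problems.append("size is not a multiple of the minimum increment")
    return problems
```

Using `Decimal` rather than `float` is a deliberate choice here: tick and increment checks rely on exact remainders, which binary floating point cannot guarantee.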

System Architecture

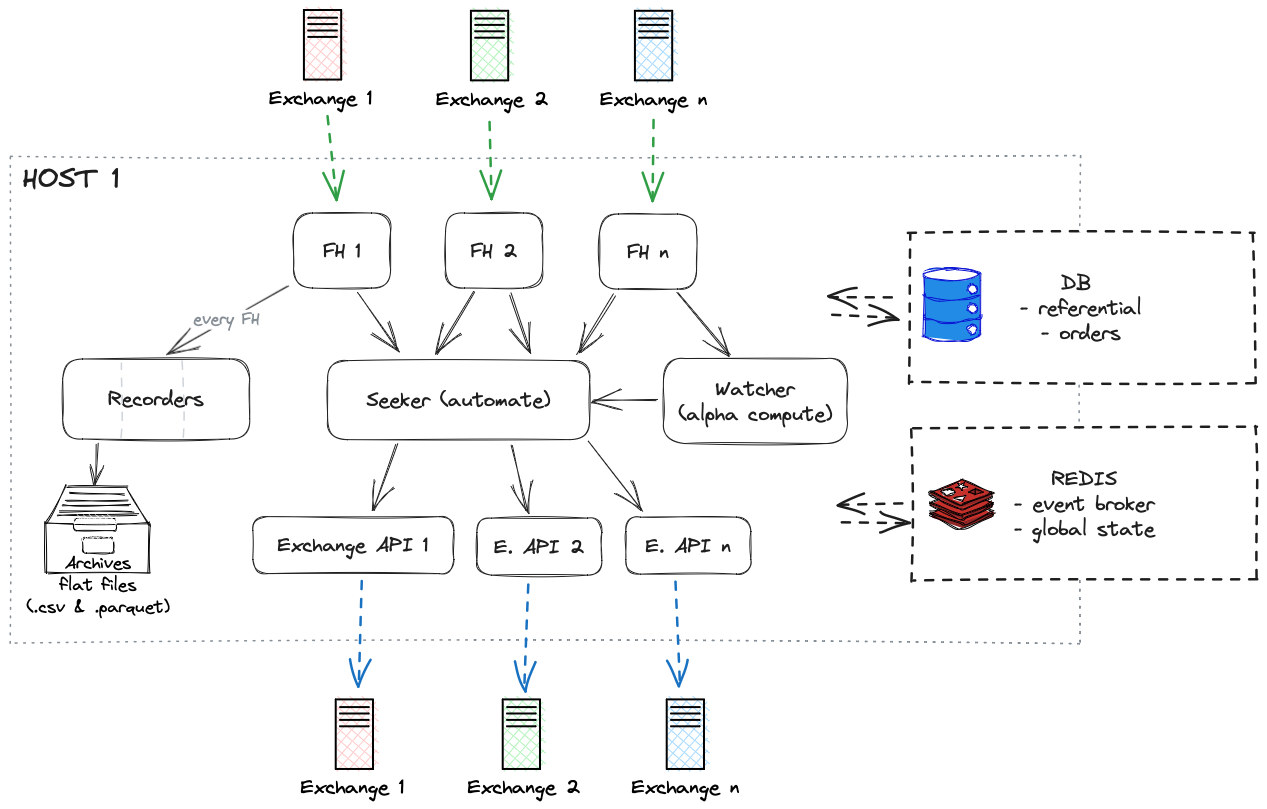

As noted above, the framework is event-driven. Its components interact through a standard communication protocol built on Redis pub/sub. Because every component uses the same protocol, new components and events are easy to integrate.
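To make the idea of a single protocol shared by all components more concrete, here is a rough sketch of an event envelope that could be serialized onto a pub/sub channel. The `Event` class, its fields, and the channel naming scheme are assumptions made for illustration; the framework's real message format is not documented here.

```python
import json
import time
from dataclasses import asdict, dataclass, field


@dataclass(frozen=True)
class Event:
    """Hypothetical envelope shared by every component."""
    kind: str        # e.g. "trade", "l2_book", "order", "heartbeat"
    exchange: str    # exchange code from the referential
    instrument: str  # feed code from the referential
    payload: dict    # event-specific body
    ts: float = field(default_factory=time.time)

    def channel(self) -> str:
        # Assumed naming scheme: one channel per exchange/instrument/kind.
        return f"{self.exchange}:{self.instrument}:{self.kind}"

    def encode(self) -> str:
        # JSON keeps the wire format readable from any component.
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def decode(cls, raw: str) -> "Event":
        return cls(**json.loads(raw))
```

With the redis-py client, a component could then publish with `redis.Redis().publish(event.channel(), event.encode())` and rebuild the event on the subscriber side with `Event.decode`.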

Legend

- Rounded Rectangles: Represent independent processes in the diagram. Each process can run multiple threads to listen for and emit events and to run separate heartbeat or long-running callbacks. Each process can run on a separate machine, provided it stays connected to Redis for intercommunication.

- Solid Arrows: Denote the transfer of events between components. Every event travels via the Redis pub/sub broker, but showing this in the diagram would cause clutter.

- Dark Dashed Arrows: Illustrate read/write operations. Every component relies on the reference database for instrument data, and each interacts with Redis to read or write data or to emit or receive events.

- Colored Dashed Arrows: Represent external service communications.

  - Feed Handlers: Primarily procure market data from exchanges via WebSockets.

  - Exchange APIs: Transmit orders to exchanges using REST APIs.
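The process model described in the legend (several threads per process, one listening for events and another running a heartbeat) can be sketched roughly as follows. This is an illustration under stated assumptions: `Component` is a hypothetical class, and a `queue.Queue` stands in for the Redis subscription so the example runs without a broker.

```python
import queue
import threading


class Component:
    """Hypothetical process skeleton: one listener thread, one heartbeat thread."""

    def __init__(self, name, heartbeat_interval=0.05):
        self.name = name
        self.inbox = queue.Queue()  # stand-in for a Redis pub/sub subscription
        self.received = []          # events seen by the listener thread
        self.heartbeats = 0         # number of heartbeat ticks so far
        self._interval = heartbeat_interval
        self._stop = threading.Event()
        self._threads = [
            threading.Thread(target=self._listen, daemon=True),
            threading.Thread(target=self._heartbeat, daemon=True),
        ]

    def _listen(self):
        # Poll with a timeout so the loop can notice the stop flag.
        while not self._stop.is_set():
            try:
                event = self.inbox.get(timeout=0.01)
            except queue.Empty:
                continue
            self.received.append(event)

    def _heartbeat(self):
        # Event.wait returns False on timeout and True once stop is set.
        while not self._stop.wait(self._interval):
            self.heartbeats += 1

    def start(self):
        for thread in self._threads:
            thread.start()

    def stop(self):
        self._stop.set()
        for thread in self._threads:
            thread.join()
```

Keeping the heartbeat on its own thread means a slow event handler does not delay the liveness signal.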

System Components

- Database (DB) serves as a continually updated reference of all financial instruments listed on exchanges.

  - The DB holds detailed specifications of each instrument, including feed code, exchange API code, instrument type, tick size, contract type, size and expiration date, minimum order size, and minimum increment order size. The order manager uses these specifications to validate orders before sending them to the exchange.

  - It also stores a comprehensive order history, lifecycle data, and associated timestamps.

  - It can also record custom events, such as data snapshots taken when a strategy is activated, for future reference and analysis.

- Redis functions as the system's State Handler, maintaining real-time information on orders, positions, balances, and internal statuses.

  - It also acts as the communication broker between components, using its publish/subscribe (pub/sub) capabilities.

- Feed Handlers procure market data from exchanges, primarily via WebSockets.

  - Feed handlers standardize and sanitize received market data, modify states in Redis if necessary, and generate events for subscribed components.

  - Each exchange uses multiple feed handlers, each handling specific public (l2_book, trades, funding) or private (orders, fills, position updates) data.

- Exchange APIs send orders to exchanges through REST APIs.

  - These components check order validity, dispatch orders to exchanges, modify states in Redis if necessary, generate events for other subscribed components, and update the DB accordingly.

- Recorders archive market data (L2 book, trades, and funding rates) into Parquet files for subsequent research and analysis.

  - Each type of market data is handled by a distinct process, though they are shown as a single component in the system diagram.

- Watchers encompass most other components, receiving one or more feeds of market data.

  - Watchers handle and use market information in real time (quotes, trades, orders, positions, balances, etc.), whether for display, tracking, or computation.

  - They can calculate and generate alpha or other essential information as required.

- Seekers, a specialized subset of Watchers, act as automated trading systems.

  - They ingest market data, alpha, or other relevant information.

  - As the decision-making core of a trading strategy, Seekers determine the timing and nature of order submissions to exchanges.
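The relationship between Watchers and Seekers described above can be sketched as a small callback dispatcher with a strategy subclass on top. All names here (`Watcher`, `SpreadSeeker`, `on`, `dispatch`, `submit_order`) are hypothetical and chosen for illustration; the trading rule is a toy, not a strategy from the framework.

```python
from collections import defaultdict


class Watcher:
    """Hypothetical base class: routes incoming events to registered callbacks."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def on(self, kind, handler):
        # Register a callback for one event kind, e.g. "quote" or "trade".
        self._handlers[kind].append(handler)

    def dispatch(self, kind, data):
        # Events with no registered handler are simply ignored.
        for handler in self._handlers[kind]:
            handler(data)


class SpreadSeeker(Watcher):
    """Toy seeker: requests a buy at the bid when the spread is wide enough."""

    def __init__(self, min_spread, submit_order):
        super().__init__()
        self.min_spread = min_spread
        self.submit_order = submit_order  # injected; order handling lives elsewhere
        self.on("quote", self.on_quote)

    def on_quote(self, quote):
        spread = quote["ask"] - quote["bid"]
        if spread >= self.min_spread:
            self.submit_order({"side": "buy", "price": quote["bid"], "size": 1})


# Usage: collect the orders the seeker would send.
sent = []
seeker = SpreadSeeker(min_spread=2, submit_order=sent.append)
seeker.dispatch("quote", {"bid": 100, "ask": 103})
```

Injecting `submit_order` keeps the seeker free of order handling and execution code, in line with the separation of concerns the component list describes.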

Modularity and Scalability

The framework is designed to be highly modular and scalable. The component approach allows for easy integration of new components, whether they are new feed handlers, new exchange APIs, new watchers, new seekers, or any other kind of component.

It is also designed to run across multiple machines: any component can run on a separate host, as long as it can reach Redis and the DB.